Affordable Situational Awareness for Everyone

Robert A. Pilgrim, PhD1, Hughie L. “Lee” Gay, Jr.1, Roy C. Brow, III1, Glenn Hembree1, Joseph P. Riolo2

1Bevilacqua Research Corporation, Huntsville, AL

2Program Executive Office Ground Combat Systems, Detroit Arsenal, MI

The primary goal of the 360-Degree Situational Awareness Sensor System (SASS-360) effort is to provide the Program Executive Office Ground Combat Systems (PEOGCS) with an affordable situational awareness capability in the immediate surroundings of ground combat and combat support platforms. The proliferation of low-cost, high-performance sensors and processors in the commercial market opens an opportunity to achieve affordable situational awareness for a broader range of vehicles. Issues commonly encountered when employing commercial-off-the-shelf (COTS) components in the development of military systems are discussed along with the various approaches for mitigation. In particular, the use of Artificial Intelligence and Machine Learning (AI/ML) based innovations is presented as an alternative to achieving functional performance through hardware specialization. An argument is made for defining systems-level performance requirements rather than setting specific hardware capabilities, so that as-yet-unknown innovations that can achieve superior performance are not rejected simply because they do not include pre-specified and costly high-performance hardware components. It is the conclusion of this paper that the advantages of using COTS outweigh the disadvantages, particularly in the ability to leverage the short life cycles of COTS components and to allow the continuing advances in AI processing to be used in otherwise long life-cycle military technologies with fixed functionality.

1. INTRODUCTION

The proliferation of small, affordable, Commercial-Off-The-Shelf (COTS) imagers in the visible, near infrared (VIS/NIR) and thermal passbands, together with embedded GPU processors, offers the potential for an innovative solution in which the imager hardware being used is expendable and field-replaceable. The availability of these devices is largely the result of consumer demand for smartphones, handhelds, and home security systems. While not suitable for some military applications, such as fire-control systems, the capability of these devices is ideally suited to the functional range and resolution requirements of the basic Situational Awareness (SA) mission. Concurrent with the development of the COTS imager and processor hardware, there continue to be rapid and significant advances in machine vision software tools for multiple-camera integrated panoramic surveillance, feature recognition, tracking and image enhancement. Many of these innovative solutions are based on Artificial Intelligence and Machine Learning (AI/ML) tools developed in the open-source software community and are currently being adapted for many military applications.[1][2]

2. BASIC SA SYSTEM FUNCTIONS

Bevilacqua Research Corporation (BRC) is currently under contract developing an affordable COTS 360-degree Situational Awareness Sensor System (SASS). The SASS 360 SA system is composed of low-cost visible and thermal cameras, paired with open-source stitching software. The SASS 360 SA supports all the functions for basic SA for the armored vehicle crew. The SASS 360 maintains crew orientation while providing a full view of the immediate area (3 to 100 meters range) surrounding the vehicle to support vehicle perimeter security, to view and alert for soldiers/personnel in vehicle danger areas, and to assist the driver with look-ahead obstacles and rollover hazards.

The system provides the vehicle commander, driver and other crew members the ability to persistently monitor the full 360-degree panoramic view while simultaneously permitting selectable and independent front, rear and side views. Crew members can independently zoom in on any selected individual camera view, while integrating the thermal camera with the visible imagery.

The SASS 360 software supports a rapid setup time, providing automated scene-stitched video in a modular and scalable system capable of adapting to a variety of camera types, processors and displays. This system integrates and registers visible and thermal imagery of diverse resolutions and fields of regard as available in the SASS sensor suite. As the sensors provide overlapping coverage, the system can continue to operate with missing components. As cameras go offline due to damage or loss, the functionality degrades gracefully while maintaining mission capability for the crew at a reduced level.

The SASS 360 system can be implemented in multiple configurations. If a reduced capability is needed, say a system to provide the driver and crew situational awareness when backing up, the number of cameras and displays can be reduced, thus providing the required capability at a lower cost while retaining the inherent software capabilities to rapidly add functionality as required. The SASS 360 system can also be deployed as an affordable complete system (cameras, software, AI, compositing and processors) to provide situational awareness and hazard detection to legacy platform crews as a lower-cost option for combat support and combat service support armored vehicles.

Finally, given the open architecture approach utilized in the SASS 360 system, select capabilities can be deployed (hosted) as functional subsystems within a larger system on either a SASS 360 processor or other processor(s), utilizing other sensors already present on the platform and allowing existing vehicle weapon systems to engage near pop-up threats identified via the 360-degree SA. This modular approach is an important part of effectively using COTS hardware and software, as discussed in detail in Section 4.

3. SASS 360 PROCESSING

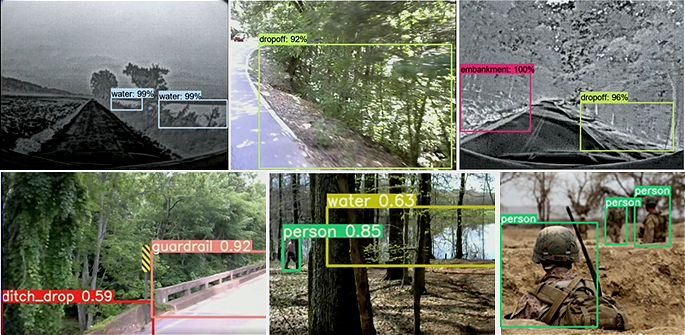

The SASS 360 system currently performs detection and reporting against multiple hazard/object classes as shown in Figure 1, using video streams from the SASS 360 system’s visible and thermal cameras. These classes can be expanded as needed for use in various Areas of Responsibility (AOR).

Figure 1: SASS 360 Obstacle, Hazard and Person Detector Examples

Expansion (addition) of classes will require dataset expansion and retraining/tailoring of the AI module. Not all object classes need to be displayed or reported. Contraction of classes can be accomplished by changing the AI module commands so that it does not report the unwanted classes, thus eliminating the need for retraining.
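The contraction of reported classes described above can be illustrated with a minimal Python sketch. It assumes the detector emits labeled detections with a confidence score; the `Detection` type, the class names and the `filter_reported` helper are hypothetical and are not part of the SASS 360 software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    label: str         # object class name emitted by the detector (hypothetical names)
    confidence: float  # detector score in [0, 1]
    box: tuple         # (x_min, y_min, x_max, y_max) in pixels


def filter_reported(detections, reported_classes, min_confidence=0.5):
    """Keep only detections whose class is enabled for reporting."""
    return [
        d for d in detections
        if d.label in reported_classes and d.confidence >= min_confidence
    ]


detections = [
    Detection("person", 0.91, (120, 80, 180, 240)),
    Detection("vehicle", 0.84, (300, 100, 520, 260)),
    Detection("drop_off", 0.40, (0, 300, 640, 360)),
]

# Report only people; the trained model itself is left untouched.
alerts = filter_reported(detections, reported_classes={"person"})
print([d.label for d in alerts])
```

Because the filter acts on the detector output rather than on the model, the set of reported classes can be changed per mission or per Area of Responsibility without retraining.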

The bounding boxes and object type tags are not typically displayed directly to the crew member. Rather, various methods of alerting the crew to the classes of objects and hazards are used that provide sufficient information without overloading the viewer or impacting their ability to perform their primary mission. Since these alerts are metadata separate from the images, they can be monitored and manipulated to provide the appropriate alerts and cues to both personnel and digital agents.

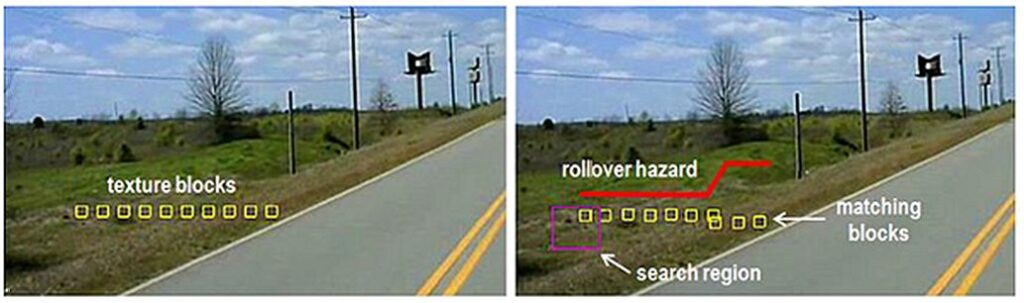

Rather than relying on a purely AI approach, the SASS 360 considers the input from multiple intelligent agents, each designed to detect and report the presence or absence of specific elements in the scene. In order to verify obstacles and hazards such as embankments and drop-offs, the SASS 360 incorporates a look-ahead compositing method to achieve a measure of the terrain and a perception of depth without special-purpose processors or displays. This method is based on geometric analysis using overlapping images from multiple COTS cameras and multiple frames from a single camera when the platform is moving.

When employing these geometry-based techniques, it is necessary to include specific information about the camera field-of-view and the vehicle kinematics. These data allow the software to apply a version of optical flow analysis to convert the separation between matching patches of texture into line-of-sight ranges.

Figure 2 shows an example of detecting drop-offs (or rollover hazards) by noting the change in position between successive frames of video as viewed from a moving vehicle. Once the matching texture blocks are registered in the second video frame, the amount of motion and knowledge of the camera Field of View (FOV) and line-of-sight are used to geometrically extract estimates of range. The details of the sensor and the dynamics of the vehicle also help to reduce the amount of computation necessary to perform the optical flow calculations by allowing for a smaller search region to find the matching texture blocks. The SASS 360 software achieves real-time performance (i.e., the processing time is less than the frame time of the video).

Figure 2: Temporal Parallax Depth Perception Exposing a Hidden Rollover Hazard
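The geometry behind range from temporal parallax can be sketched in a few lines of Python. This is a simplified illustration and not the SASS 360 implementation: it assumes a pinhole camera whose optical axis is aligned with the direction of travel, straight-line vehicle motion between the two frames, a static scene point, and a purely horizontal (one-dimensional) analysis. The function names and numeric values are hypothetical. With these assumptions, the camera positions and the scene point form a triangle, and the law of sines gives the range from the second camera position as $R = b \sin\theta_1 / \sin(\theta_2 - \theta_1)$, where $b$ is the distance travelled between frames and $\theta_1$, $\theta_2$ are the bearings of the matched texture block in the first and second frames.

```python
import math


def pixel_to_bearing(px, image_width, hfov_deg):
    """Angle (radians) between the optical axis and the ray through column px."""
    # Pinhole model: focal length in pixels follows from the horizontal FOV.
    focal_px = (image_width / 2.0) / math.tan(math.radians(hfov_deg) / 2.0)
    return math.atan((px - image_width / 2.0) / focal_px)


def range_from_parallax(px_first, px_second, baseline_m, image_width, hfov_deg):
    """Line-of-sight range (m) from the second camera position to a static point.

    baseline_m is the distance travelled between frames (speed x frame interval).
    """
    theta1 = pixel_to_bearing(px_first, image_width, hfov_deg)
    theta2 = pixel_to_bearing(px_second, image_width, hfov_deg)
    parallax = theta2 - theta1
    if abs(parallax) < 1e-9:
        # No measurable shift: the point is very far away or on the axis of motion.
        return math.inf
    return baseline_m * math.sin(theta1) / math.sin(parallax)


# Synthetic check: a point 2 m to the side and 10 m ahead; the vehicle advances 1 m.
width, hfov = 640, 60.0
focal_px = (width / 2.0) / math.tan(math.radians(hfov) / 2.0)
px_a = width / 2.0 + focal_px * (2.0 / 10.0)  # column in the first frame
px_b = width / 2.0 + focal_px * (2.0 / 9.0)   # column in the second frame
estimate = range_from_parallax(px_a, px_b, 1.0, width, hfov)
true_range = math.hypot(2.0, 9.0)
print(round(estimate, 2), round(true_range, 2))
```

The same relation shows why knowledge of the vehicle kinematics narrows the search region: for an expected range interval, the baseline bounds the possible pixel shift of each texture block between frames.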

In addition to driver assistance, this system also supports other crew operations such as surveillance and threat detection in overwatch or silent watch by generating alerts in selectable regions of interest while reducing crew workload and fatigue. Combining the AI object detection with standard machine vision functions like Moving Target Indication (MTI) reduces false alarms. Once processed, these tags and flags are presented to the appropriate crew members. Look-ahead compositing of geometric and AI data means that obstacles and threats can be identified and located at some distance from the vehicle and then presented to the crew at the appropriate time.
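One possible way to combine AI detections with MTI output is to confirm a detection only when it overlaps a region in which motion was indicated. The Python sketch below illustrates this gating rule under stated assumptions: both sources report axis-aligned boxes in the same image coordinates, and the helper names and the 0.3 overlap threshold are hypothetical choices rather than SASS 360 parameters.

```python
def box_area(box):
    """Area of an (x_min, y_min, x_max, y_max) box; zero if the box is empty."""
    x0, y0, x1, y1 = box
    return max(0, x1 - x0) * max(0, y1 - y0)


def overlap_fraction(box, other):
    """Fraction of `box` that is covered by `other`."""
    intersection = (
        max(box[0], other[0]), max(box[1], other[1]),
        min(box[2], other[2]), min(box[3], other[3]),
    )
    area = box_area(box)
    return box_area(intersection) / area if area else 0.0


def confirm_with_motion(detections, motion_boxes, min_overlap=0.3):
    """Keep (label, box) detections that sufficiently overlap any MTI region."""
    return [
        (label, box) for label, box in detections
        if any(overlap_fraction(box, m) >= min_overlap for m in motion_boxes)
    ]


ai_detections = [
    ("person", (100, 100, 140, 200)),  # coincides with a moving region
    ("person", (400, 120, 440, 220)),  # no motion nearby, treated as a likely false alarm
]
mti_regions = [(90, 90, 150, 210)]

confirmed = confirm_with_motion(ai_detections, mti_regions)
print(confirmed)
```

A rule of this kind suppresses detections of static clutter, at the cost of also suppressing stationary objects, so it would apply only to alert classes for which motion is expected.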

One of the unique methods demonstrated in the SASS program is the ability to extract depth information from video images without the need for radar, Light Detection And Ranging (LiDAR) or other active (radiation emitting) sensing and without the cost of adding 3D depth perception to system imagers, processors and displays. This completely passive mode of operation is a unique requirement for military applications for low-observable operations.

The combination of object identification with geometric depth perception has the additional benefit of being able to “look ahead”, projecting the most likely path of other vehicles and anticipating hazardous situations. In this regard, the SASS 360 system incorporates a simple form of look-ahead frame prediction in which the location of moving and static objects can be accurately predicted for some future time (100-200 milliseconds ahead).[3]
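As a simple illustration of look-ahead over such short horizons, the Python sketch below extrapolates an object position with a constant-velocity model. This model is an assumption made for illustration; it is neither the method of reference [3] nor necessarily the one used in SASS 360, and the track format and function name are hypothetical.

```python
def predict_position(track, lookahead_s):
    """Extrapolate the latest position of a track by lookahead_s seconds.

    track is a list of (time_s, x, y) samples, oldest first. Velocity is
    estimated from the last two samples and assumed constant.
    """
    (t0, x0, y0), (t1, x1, y1) = track[-2], track[-1]
    dt = t1 - t0
    if dt <= 0:
        raise ValueError("track timestamps must be strictly increasing")
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    return x1 + vx * lookahead_s, y1 + vy * lookahead_s


# An object moving at about 5 m/s along x, predicted 200 ms ahead.
track = [(0.0, 10.0, 5.0), (0.1, 10.5, 5.0)]
predicted = predict_position(track, lookahead_s=0.2)
print(predicted)
```

Over 100 to 200 milliseconds most ground objects change velocity very little, which is why even a simple extrapolation can be useful; a static object is handled by the same code with zero estimated velocity.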

4. USING COTS COMPONENTS

Some argue there is a “dark side” to the use of COTS that must be addressed in order to make effective use of these affordable and highly effective components.[4] This issue centers on the military need for long system development and maintenance cycles compared to the 18-month life cycle typical of digital consumer technology products. While challenging, these issues are being dealt with in a number of ways that can be summarized as agile processes, adaptable and modular systems design, and the adoption of systems-level performance requirements rather than hardware-level specifications.

One of the SASS 360 program baseline requirements is for the system to be hardware agnostic. This applies to both the cameras and the processors. To that end, SASS 360 has been demonstrated using a wide variety of video imagers, including basic webcams (e.g., Logitech C270), the Arducam Mini, and the Sierra Olympic (Tamarisk) thermal cameras. The outputs of the video cameras are processed as separate frames, so the system will operate with any imager format that has a compatible coder-decoder (codec).

Making the SASS 360 system processor agnostic was more challenging. With computer hardware evolving rapidly, it is essential that the software not depend on some unique feature unavailable on the standard embedded system. To support the objective of modularity, commercial standards are used, such as Real-Time Streaming Protocol (RTSP), Web Real-Time Communication (WebRTC), and the widely used video compression format H.264.

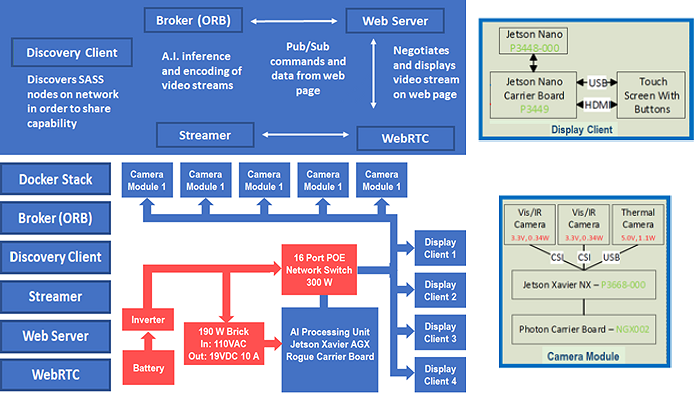

In order to ensure compatibility with the widest range of processors, the SASS 360 is based on Docker technology. These Docker nodes are capable of running on any level of processor, from a Raspberry Pi up to rack-mounted, clustered systems, making them scalable with zero modification. As shown diagrammatically in Figure 3, the Discovery Client (left side) allows nodes to locate other systems and capabilities on a network. The browser-based client allows viewing on standard computers, cell phones, and tablets. The modular stand-alone design allows for creation, testing and deployment without reconfiguring or rebuilding the Docker stack.

Figure 3: The SASS Node and Camera/Processor Design.

The Display clients (right side) are the main end-user interface to the SASS 360 system. They consist of a Jetson Nano System On Module (SOM) and a ruggedized display with buttons. The display units are responsible for providing a web browser for the user to access and control the system. The buttons on the display are the primary method to manipulate the controls and adjust the views to the user’s preference. Touch-screen capability is available as an alternative way of accessing the same functions.

By utilizing industry standards such as Universal Serial Bus (USB) and High-Definition Multimedia Interface (HDMI), the display client can be adjusted to accommodate a wide variety of displays and input devices. Finally, the stand-alone node protects the system in the event of malfunctioning hardware.

At the time of this writing, BRC has just completed the second demonstration of the SASS 360 system. The laboratory setup of displays and multiple-sensor camera units used in this demonstration is shown in Figure 4.

Figure 4: SASS 360 Situational Laboratory Displays and Example Multi-Camera/Processor Box

Adaptability is the key to the effective use of COTS hardware components in programs that require a long life cycle. The government should not expect the commercial vendor to maintain large inventories of obsolete hardware, but it can work with suppliers to ensure that updated components are SWAP compatible with earlier models, whether or not the government chooses to take advantage of the higher performance and new features provided in the latest models.

The use and management of COTS software also has its challenges. According to the U.S. Computer Emergency Readiness Team (US-CERT), the problem with COTS software lies in treating it as a “black box”.[5] This issue is mitigated through access to and use of source code rather than binaries for open-source software components. This is another advantage of using Docker containers: validated software can be ported to new processor hardware without the need to return to a public repository for processor-specific software to maintain compatibility with the new hardware.

SASS 360 SA software is designed to be modular and extensible by utilizing commercial software industry best practices, including Docker containers (lightweight, standalone, executable packages of software that include everything needed to run an application), Kubernetes (an open-source system for automating deployment, scaling, and management of containerized applications), and Object Request Brokers (ORBs), middleware that allows program calls to be made from one computer to another via a computer network, providing location transparency through remote procedure calls. For battlefield operations, the SASS 360 software runs in stand-alone mode (i.e., completely within the vehicle without outside data connections). However, the SASS modular design through the ORB permits trainers or other remote viewers to log into vehicles for live access to the same SA view as that provided to the crew. This allows a trainer or supervisor to observe and advise crew members in real time. Note that this would require the addition of an IP-capable radio or a 5th Generation (5G) cell connection.

The modular design also allows the software to be as hardware agnostic as possible, supporting changes or extensions to the system configuration with minimal rebuilding. Moreover, this approach enables systems to be built by piecing together objects from different vendors, thus providing flexibility in vendor selection and maintaining an open architecture.

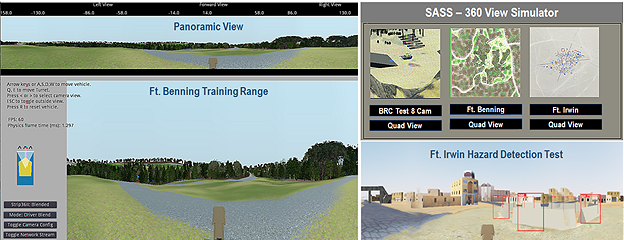

The SASS 360 Simulator shown in Figure 5 is a 3D first-person view simulator built using the Godot Open Architecture Integrated Development Environment (IDE).

Figure 5: Selected Features of the SASS 360 Simulator

Due to the shorter product life cycles of COTS components, it is important to be able to frequently perform comparison tests to ensure proper operation of new configurations and versions replacing earlier implementations. The BRC SASS 360 Simulator supports such comparative tests and performs a number of other actions important for maintaining the SASS 360 software and applying it to multiple platforms. The simulator depicts scenes from the Ft. Benning Training Range in Georgia (driver and panoramic views) and Ft. Irwin in California (AI Hazard Detection Test).

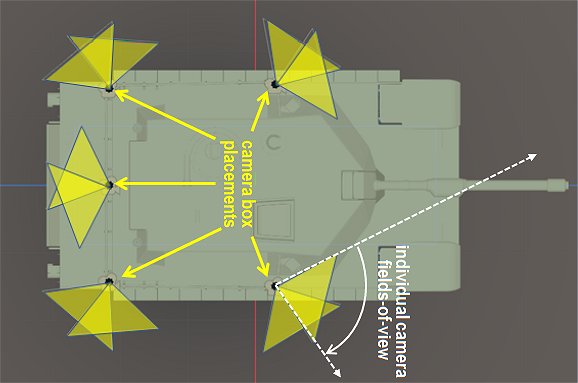

Some of the other features of the SASS 360 Simulator include the modeling of terrain elevation and vehicle body dynamics to produce realistic rollover hazards, the ability to model camera-processor-display latencies to test effects on drivers, and communication channels to display real-world SASS video in the simulator and have the simulator generate simulated video for offline software testing. This capability makes the comparison of performance for alternate configurations and updated imager, processor or display components feasible. When outfitting a new vehicle, the SASS 360 Simulator permits the user to test full SA coverage for different camera placements as shown in Figure 6.

Figure 6: SASS 360 Simulator Camera Placement and SA Coverage Test

This feature enables the user to see the effects of camera position, orientation and selected lens systems in both a coverage map and first-person (in the vehicle) views.
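A coverage test of this kind can be approximated, in its simplest form, by checking which azimuths around the vehicle fall inside at least one camera field of view. The Python sketch below is a simplified stand-in for the simulator's coverage map, not its actual algorithm: it considers only horizontal FOV and camera heading, and it ignores range, elevation, lens distortion and occlusion by the vehicle body. The camera layout and function names are hypothetical.

```python
def covered_azimuths(cameras, step_deg=1):
    """Return the set of azimuth bins (degrees) seen by at least one camera.

    cameras is an iterable of (heading_deg, hfov_deg) pairs.
    """
    covered = set()
    for azimuth in range(0, 360, step_deg):
        for heading, hfov in cameras:
            # Smallest signed angular difference between bin and camera heading.
            diff = (azimuth - heading + 180) % 360 - 180
            if abs(diff) <= hfov / 2:
                covered.add(azimuth)
                break
    return covered


def coverage_fraction(cameras, step_deg=1):
    """Fraction of the full 360-degree azimuth circle that is covered."""
    total_bins = len(range(0, 360, step_deg))
    return len(covered_azimuths(cameras, step_deg)) / total_bins


# Four cameras at 90-degree spacing, each with a 120-degree horizontal FOV.
full_suite = [(0, 120), (90, 120), (180, 120), (270, 120)]
# The same suite after losing the right-side camera.
degraded = [cam for cam in full_suite if cam[0] != 90]

print(coverage_fraction(full_suite))
print(round(coverage_fraction(degraded), 3))
```

With overlapping fields of view, the loss of one camera in this example leaves a gap on one side only, which is the graceful degradation behavior described in Section 2.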

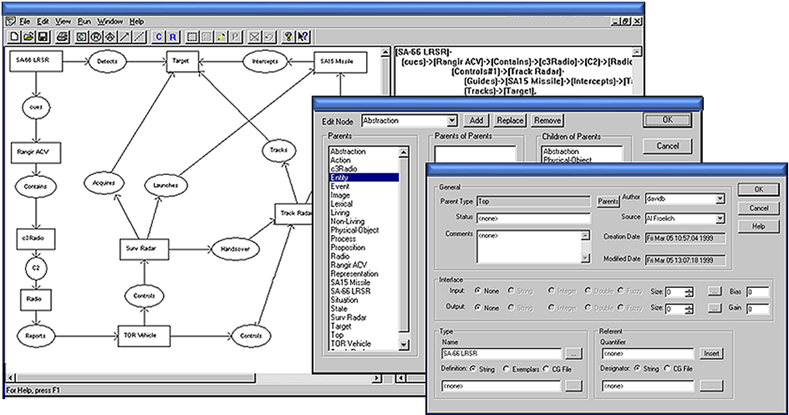

In order to maintain effective control and to extract the most from the COTS software components, BRC monitors the outputs of the various AI and machine vision elements using the Cognitive Object Reasoning Engine (CORE) tool for building knowledge graphs as shown in Figure 7. Using a particular type of knowledge graph known as a Conceptual Graph (CG) the entity relationships between these software agents are captured and represented as “is-a” relationships.

Figure 7: CORE Conceptual Graph Knowledgebase Editor
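The role of “is-a” relationships can be illustrated with a minimal Python sketch of a concept hierarchy that supports transitive queries. It is not the CORE tool or its interface; the `IsAGraph` class and the concept names are hypothetical and serve only to show how such relationships can be stored and traversed.

```python
class IsAGraph:
    """A tiny store of is-a links with a transitive membership query."""

    def __init__(self):
        self._parents = {}  # concept -> set of direct parent concepts

    def add_is_a(self, child, parent):
        self._parents.setdefault(child, set()).add(parent)

    def is_a(self, concept, ancestor):
        """True if ancestor is reachable from concept through is-a links.

        A concept is treated as being an instance of itself.
        """
        stack, seen = [concept], set()
        while stack:
            node = stack.pop()
            if node == ancestor:
                return True
            if node in seen:
                continue
            seen.add(node)
            stack.extend(self._parents.get(node, ()))
        return False


graph = IsAGraph()
graph.add_is_a("drop_off", "rollover_hazard")
graph.add_is_a("rollover_hazard", "hazard")
graph.add_is_a("person", "object")

print(graph.is_a("drop_off", "hazard"))  # follows two links
print(graph.is_a("person", "hazard"))    # no path exists
```

A monitoring agent could use a query of this kind to decide whether a reported entity belongs to a category for which an alert rule is defined.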

When machine learning is used to extract knowledge from data, conceptual graphs help verify entity relationships for known models. Conversely, when one or more CG monitoring agents detect an entity value out of range or an unrecognized pattern, the anomaly can be reported and handled. The particular actions for dealing with anomalies depend on the application. The information passed along to the human operator is an optimal alert or warning with minimal false alarms.

While some intelligent monitoring agents are unique to a particular application, it is most useful to include monitors to enforce fundamental rules that apply to all situations. These universal agents are based on laws of physics, or rules of logic or geometry. When different monitoring agents return conflicting or incompatible values, the CG resolves the anomaly. Such an agent uses unsupervised learning to establish nominal behavior and then reports deviations from this baseline.[7] Anomaly detection is not only useful for improving performance and reducing false alarms in hazard alerts; it is also a valuable tool for the early detection of software failures due to undetected programming errors and even the presence of cyber attacks.
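The idea of establishing nominal behavior and reporting deviations can be sketched with a very simple statistical baseline. The Python example below is an illustrative stand-in and not the method of reference [7]: it assumes a single scalar quantity (here, a hypothetical per-frame processing time in milliseconds), learns its mean and standard deviation from unlabeled nominal samples, and flags values more than a chosen number of standard deviations away.

```python
import statistics


class BaselineMonitor:
    """Flags values that deviate strongly from a learned nominal baseline."""

    def __init__(self, threshold_sigmas=3.0):
        self.threshold_sigmas = threshold_sigmas
        self.mean = None
        self.stdev = None

    def fit(self, nominal_values):
        """Learn the baseline from unlabeled samples of nominal behavior."""
        self.mean = statistics.mean(nominal_values)
        self.stdev = statistics.pstdev(nominal_values)

    def is_anomalous(self, value):
        if self.mean is None:
            raise RuntimeError("call fit() before is_anomalous()")
        if self.stdev == 0:
            # A constant baseline: any different value is a deviation.
            return value != self.mean
        return abs(value - self.mean) / self.stdev > self.threshold_sigmas


# Hypothetical frame processing times (ms) recorded during normal operation.
nominal_ms = [30, 31, 29, 30, 32, 28, 30, 31, 29, 30]
monitor = BaselineMonitor()
monitor.fit(nominal_ms)

print(monitor.is_anomalous(31))  # within normal variation
print(monitor.is_anomalous(45))  # far outside the baseline
```

The same pattern applies to other monitored quantities, such as detection counts per frame or estimated ranges, where a sudden departure from the baseline may indicate a sensor fault, a software error or tampering.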

Unfortunately, this is a problem not limited to open-source COTS software. Whether public or custom made, there is no such thing as totally error-free code or unhackable software. Cyber threats are a continuing problem that must be anticipated and dealt with effectively. The number of vulnerabilities reported per year in both commercial vendor and open-source software has increased from around 1,000 in 2000 to over 15,000 in 2019.[6] It is unrealistic to assume any software can be made absolutely secure. The best approach to maintaining secure software is to stay resilient and maintain cyber awareness through close cooperation with science and technology organizations with expertise in cyberspace risk management. In this regard, judicious use of COTS software has the advantage of “many eyes”. For military applications and software for National Critical Infrastructure (NCI), building systems from COTS source code combined with application-specific software, rather than from binary (executable) files, significantly reduces the risk. The SASS 360 Simulator adds an additional layer of security by permitting testing in an air-gapped environment before transfer to a fielded system.

5. SUMMARY & RECOMMENDATIONS

It is anticipated that innovative software solutions and methods will continue to replace special-purpose hardware solutions in future SA systems.

For example, an issue for any SA system is the delay in the display of the surrounding scene relative to real-time direct observation. This issue is less critical for an SA system providing basic functionality than for a system that also supports fire-control functions. In either case, recent advances in the performance of AI methods such as video frame prediction suggest that the state-of-the-art is very close to being able to use software to offset hardware latency sufficiently to meet any well-defined performance requirements. Whether or not one chooses to consider such processing in the SA stack, it seems clear that these advanced AI-based solutions will be increasingly important contributors to SA system capabilities.

It is recommended that requirements be based on functional performance rather than on the specific characteristics of the hardware components. Setting systems requirements for SA will ensure that functional performance is achieved while encouraging, rather than constraining, innovations.

6. REFERENCES

[1] Atherton, Kelsey D., “How Silicon Valley is Helping the Pentagon Automate Finding Targets”, Military.com Jan 14, 2022.

[2] Han, COL Sang, D., “Delivering Capabilities for 2020 and Beyond”, The JAIC Feb 13, 2020.

[3] Kwon, Y. H., and M. G. Park, “Predicting Future Frames Using Retrospective Cycle GAN,” 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 1811-1820.

[4] Cole, S., “Managing COTS obsolescence for military systems”, Military Embedded Systems, Sept. 12, 2016.

[5] Miller, C., “Security Considerations in Managing COTS Software”, Cybersecurity & Infrastructure Security Agency, May 14, 2013.

[6] Ozkan, B. E., and S. Bulkan, “Hidden Risks to Cyber Security from Obsolete COTS Software”, 2019 11th International Conference on Cyber Conflict: Silent Battle, 2019.

[7] Pilgrim, R. A., and A. T. Bevilacqua, “Toward Contextual AI/ML”, Computational Science & Computational Intelligence, Springer Nature Book Series, accepted for publication July 2021.

Paper accepted for presentation at:

2022 NDIA MICHIGAN CHAPTER

GROUND VEHICLE SYSTEMS ENGINEERING

and TECHNOLOGY SYMPOSIUM

<Vehicle Electronics & Architecture> Technical Session (cancelled)

August 16-18, 2022 – Novi, Michigan